Stable Diffusion v2.1 and DreamStudio Updates 7-Dec 22

Stable Diffusion v2.1 Release

We’re happy to bring you the latest release of Stable Diffusion, Version 2.1. We promised faster releases after releasing Version 2.0, and we’re delivering only a few weeks later. The Version 2 model line is trained using a brand new text encoder (OpenCLIP), developed by LAION, that gives us a deeper range of expression than Version 1.

Within a few days of releasing SD v2, people started getting fantastic results as they learned some new ways to prompt, and you’ll be happy to discover that 2.1 supports the new prompting style and brings back many of the old prompts too! The differences come down to more data, more training, and less restrictive dataset filtering.

Stable Diffusion v2.1-768

Credit: KaliYuga_ai

Prompt: a portrait of a beautiful blonde woman, fine - art photography, soft portrait shot 8 k, mid length, ultrarealistic uhd faces, unsplash, kodak ultra max 800, 85 mm, intricate, casual pose, centered symmetrical composition, stunning photos, masterpiece, grainy, centered composition : 2 | blender, cropped, lowres, poorly drawn face, out of frame, poorly drawn hands, blurry, bad art, blurred, text, watermark, disfigured, deformed, closed eyes : -2 / Stable Diffusion v2.1-768

When we set out to train Stable Diffusion 2, we worked hard to give the model a much more diverse and wide-ranging dataset, and we filtered it for adult content using LAION’s NSFW filter. The dataset greatly increased image quality regarding architecture, interior design, wildlife, and landscape scenes. But the filter dramatically cut down on the number of people in the dataset, meaning folks had to work harder to get similar results generating people.

Prompt: A Hyperrealistic photograph of ancient Tokyo/London/Paris architectural ruins in a flooded apocalypse landscape of dead skyscrapers, lens flares, cinematic, hdri, matte painting, concept art, celestial, soft render, highly detailed, cgsociety, octane render, trending on artstation, architectural HD, HQ, 4k, 8k / Stable Diffusion v2.1-768

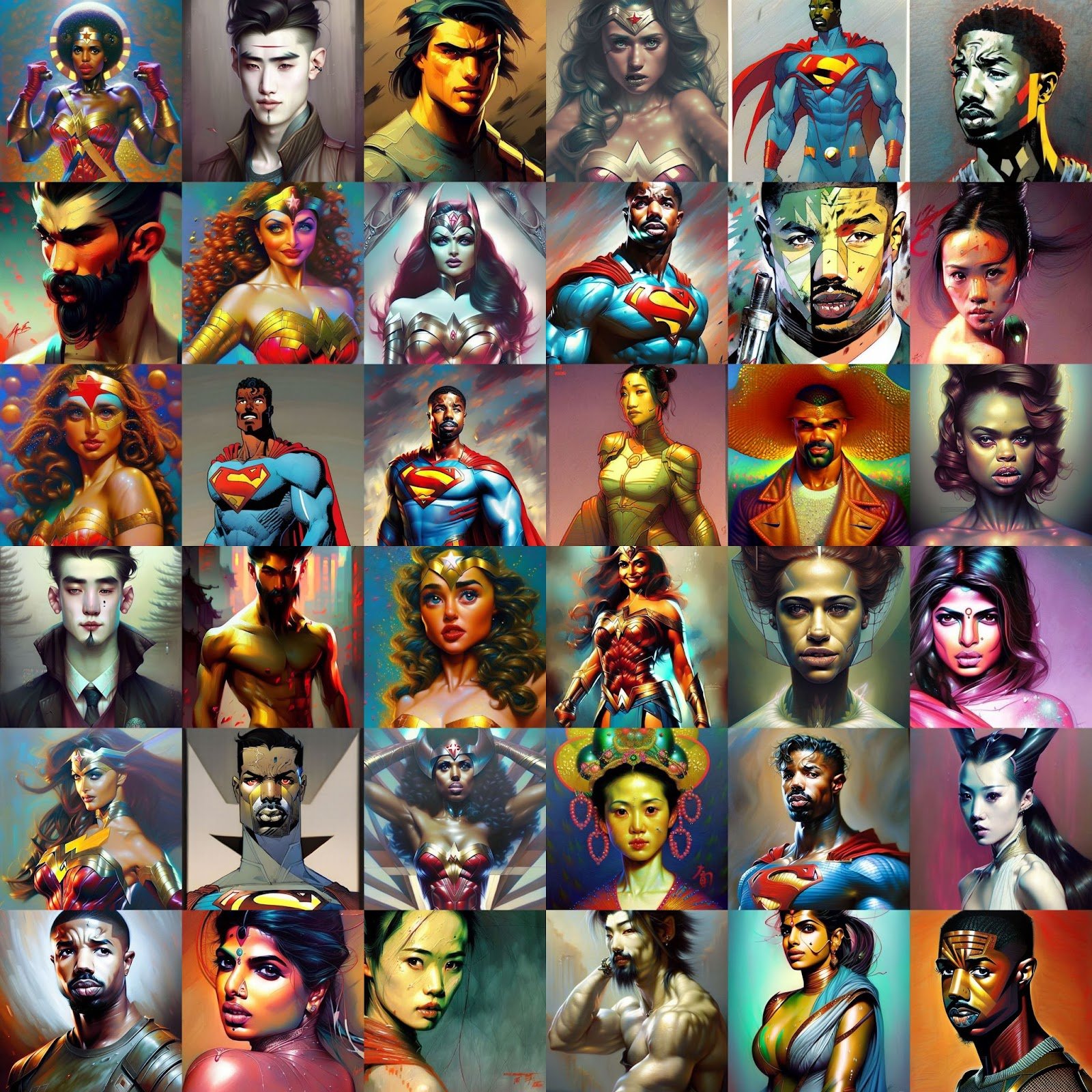

We listened to our users and adjusted the filters. The filter still strips out adult content but is less aggressive, which reduces the number of false positives it detects. We fine-tuned the SD 2.0 model with this updated setting, giving us a model which captures the best of both worlds. It can easily render beautiful architectural concepts and natural scenery yet still produce fantastic images of people and pop culture. The new release delivers improved anatomy and hands and is much better than SD 2.0 at rendering a wide variety of art styles.

Superheroes with Stable Diffusion 2.1

The model also has the power to render non-standard resolutions. That helps you do many awesome new things, like work with extreme aspect ratios that give you beautiful vistas and epic widescreen imagery.

Prompt: A valley in the Alps at sunset, epic vista, beautiful landscape, 4k, 8k / Stable Diffusion v2.1-768 CLIP off

Prompt: A Hyperrealistic photograph of ancient Malaysian architectural ruins in Borneo's East Malaysia, lens flares, cinematic, hdri, matte painting, concept art, celestial, soft render, highly detailed, cgsociety, octane render, trending on artstation, architectural HD, HQ, 4k, 8k

Prompt: A view underwater of colorful schools of fish swimming by a coral reef, professional, 4k, 8k / SD v2.1-768 CLIP off
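If you want to experiment with extreme aspect ratios yourself, a small helper for picking image dimensions can be handy. The sketch below is only an illustration: the function name `dimensions_for_aspect` is hypothetical, the 768-pixel short side is borrowed from the v2.1-768 model name, and snapping each side to a multiple of 64 is an assumption about what a given tool accepts, not something specified in this release.

```python
def dimensions_for_aspect(aspect_w, aspect_h, short_side=768, multiple=64):
    """Return (width, height) for an aspect ratio.

    The shorter side is fixed at `short_side`; the longer side is scaled to
    match the ratio and snapped to the nearest multiple of `multiple`.
    Both defaults are assumptions for illustration.
    """
    if aspect_w <= 0 or aspect_h <= 0:
        raise ValueError("aspect ratio terms must be positive")

    def snap(value):
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    if aspect_w >= aspect_h:
        # Landscape or square: height is the short side.
        return snap(short_side * aspect_w / aspect_h), snap(short_side)
    # Portrait: width is the short side.
    return snap(short_side), snap(short_side * aspect_h / aspect_w)


print(dimensions_for_aspect(21, 9))  # (1792, 768)
print(dimensions_for_aspect(16, 9))  # (1344, 768)
print(dimensions_for_aspect(1, 1))   # (768, 768)
```

Because of the snapping, the resulting ratio is only approximate (16:9 becomes 1344x768, which is 7:4). Whether a particular interface accepts a given size is something to check in that tool.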

DreamStudio Updates

Many people have noticed that “negative prompts” worked wonders with 2.0, and they work even better in 2.1.

Negative prompts are the opposites of a prompt; they allow the user to tell the model what not to generate. Negative prompts often eliminate unwanted details like mangled hands, too many fingers, or out-of-focus and blurry images.

You can easily try negative prompts in DreamStudio right now by appending “| <negative prompt>: -1.0” to the prompt. For instance, appending “| disfigured, ugly:-1.0, too many fingers:-1.0” occasionally fixes the issue of generating too many fingers.
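If you assemble prompts programmatically, the appended form described above can be sketched as a small string helper. This is a minimal illustration, not DreamStudio's own code: the function name `build_weighted_prompt` is hypothetical, the example subject prompt is made up, and it assumes each weighted segment is written as `text:weight` with segments separated by `|`, following the pattern used in the prompts in this post.

```python
def build_weighted_prompt(parts):
    """Join (text, weight) pairs into a 'text:weight | text:weight' string.

    The separator and layout are assumptions based on the prompt examples
    in this post.
    """
    segments = []
    for text, weight in parts:
        text = text.strip()
        if not text:
            raise ValueError("prompt text must not be empty")
        segments.append(f"{text}:{weight}")
    return " | ".join(segments)


# Hypothetical subject prompt plus two negative prompts from the text above.
prompt = build_weighted_prompt([
    ("a portrait of a woman waving", 1.0),
    ("disfigured, ugly", -1.0),
    ("too many fingers", -1.0),
])
print(prompt)
# a portrait of a woman waving:1.0 | disfigured, ugly:-1.0 | too many fingers:-1.0
```

Keeping the positive and negative parts as separate pairs makes it easy to adjust one weight at a time while leaving the main prompt untouched.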

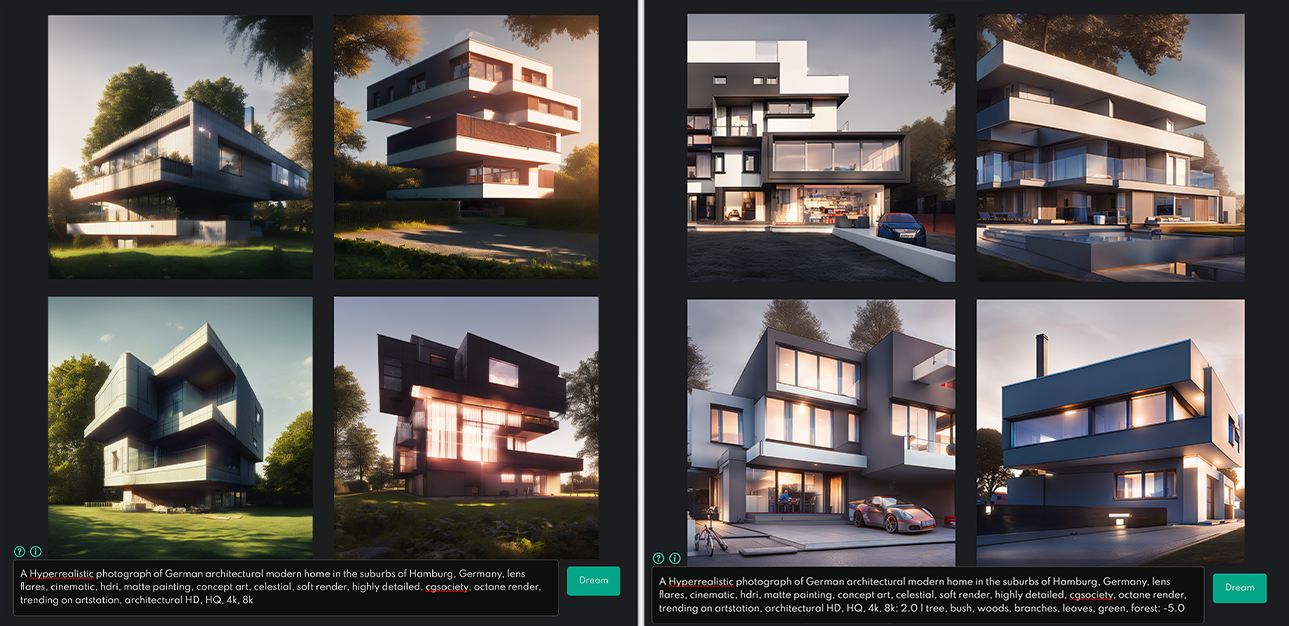

Users can prompt the model to have more or less of certain elements in a composition, such as certain colors, objects or properties, using weighted prompts. Starting with a standard prompt and refining the overall image with prompt weighting to increase or decrease compositional elements gives users greater control over image synthesis.

For example:

Side-by-side comparison of a prompt in DreamStudio without a negative prompt (left), and with a negative prompt (right). In this case the negative prompt is used to tell the model to limit the prominence of trees, bushes, leaves and greenery - all while maintaining the same initial input prompt.
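To make the weighting idea concrete, here is a rough sketch of how a weighted prompt string could be split back into its parts. It is an assumption-laden illustration rather than how DreamStudio actually interprets prompts: the helper names `parse_weighted_prompt` and `split_positive_negative` are hypothetical, the cabin prompt is made up, segments are assumed to be separated by `|`, and a trailing `: number` is treated as the weight.

```python
def parse_weighted_prompt(prompt, default_weight=1.0):
    """Split 'text : weight | text : weight' into (text, weight) pairs.

    Segments without a numeric weight get `default_weight` (an assumption).
    """
    pairs = []
    for segment in prompt.split("|"):
        segment = segment.strip()
        if not segment:
            continue
        text, sep, tail = segment.rpartition(":")
        weight = default_weight
        if sep:
            try:
                weight = float(tail)
            except ValueError:
                # The last colon belonged to the text, not to a weight.
                text = segment
        else:
            text = segment
        pairs.append((text.strip(), weight))
    return pairs


def split_positive_negative(pairs):
    """Separate pairs into those to emphasize and those to suppress."""
    positive = [(t, w) for t, w in pairs if w >= 0]
    negative = [(t, w) for t, w in pairs if w < 0]
    return positive, negative


# Hypothetical prompt in the style of the comparison above.
pairs = parse_weighted_prompt(
    "a quiet cabin by a lake : 2 | trees, bushes, leaves, greenery : -2"
)
positive, negative = split_positive_negative(pairs)
print(positive)  # [('a quiet cabin by a lake', 2.0)]
print(negative)  # [('trees, bushes, leaves, greenery', -2.0)]
```

One caveat of this simple approach: a segment whose text ends in a colon followed by a number (for example an aspect ratio like "16:9") would be misread as carrying a weight.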

Open Source Release

At Stability we know open is the future of AI, and we’re committed to developing current and future versions of Stable Diffusion in the open. Expect more models and more releases to come fast and furious, along with some amazing new capabilities as generative AI gets more and more powerful in the new year.

For more details about accessing the model, please check out the release notes on the Stability AI GitHub.

Also, you can find the weights and model cards here.

View our ongoing project, the Stable Diffusion Prompt Book, online here.

Visit dreamstudio.ai to create a DreamStudio account.

For more information, visit: https://stability.ai/stable-diffusion

Join our 100k+ member community on Discord.

Image Prompt: A hyperrealistic painting of an astronaut inside of a massive futuristic metal mechawarehouse, cinematic, sci-fi, lens flares, rays of light, epic, matte painting, concept art, celestial, soft render, octane render, trending on artstation, 4k, 8k : 2 | blender, cropped, lowres, out of frame, blurry, bad art, blurred, text, disfigured, deformed : -2 / Stable Diffusion v2.1 with CLIP Guidance ON

Above: the negative prompt is used to reinforce the visual fidelity and style of cinematic science-fiction concept art.

We are hiring researchers and engineers excited to work on the next generation of open source Generative AI models! If you’re interested in joining Stability AI, please get in touch with careers@stability.ai with your CV and a short statement about yourself.